In this tutorial, you will learn how to upload a zip file to Google Colab, unzip it, and use its contents to train an SRCNN (Super-Resolution Convolutional Neural Network) model. Training is done in a Google Colab Jupyter notebook (IPython).

Step 1: Download the image dataset you want to use (this tutorial uses the yang91.zip dataset).

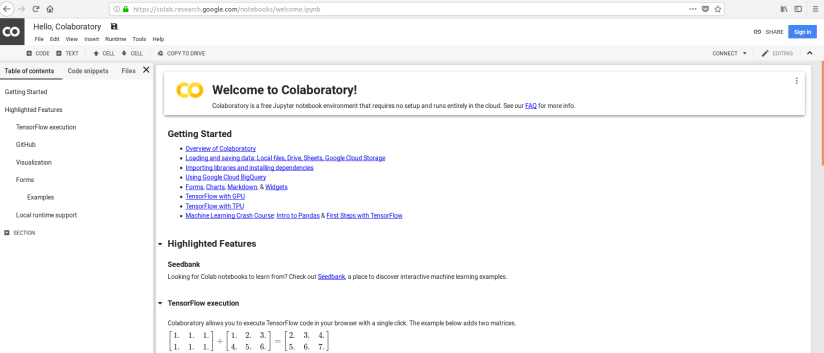

Step 2: Go to Google Colab (https://colab.research.google.com) and sign in with your Gmail account.

After signing in, you will see an interface like the one below:

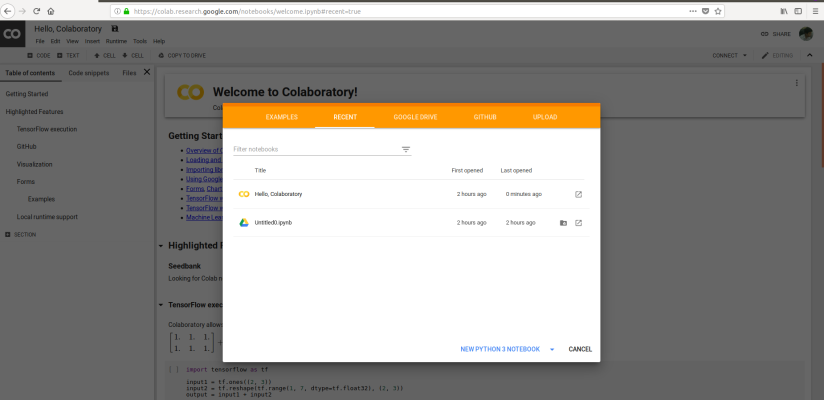

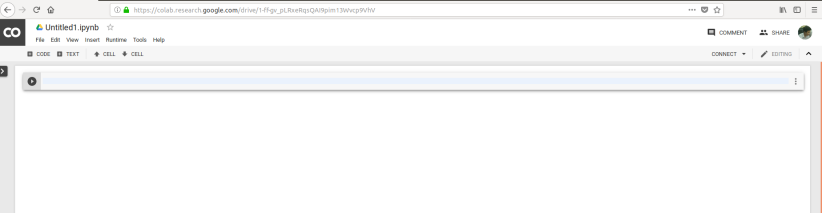

Then, click NEW PYTHON3 NOTEBOOK.

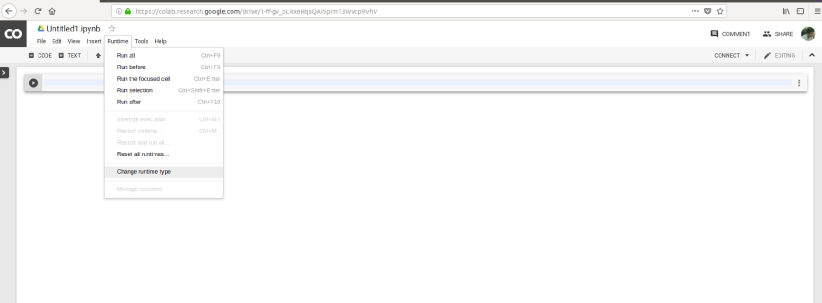

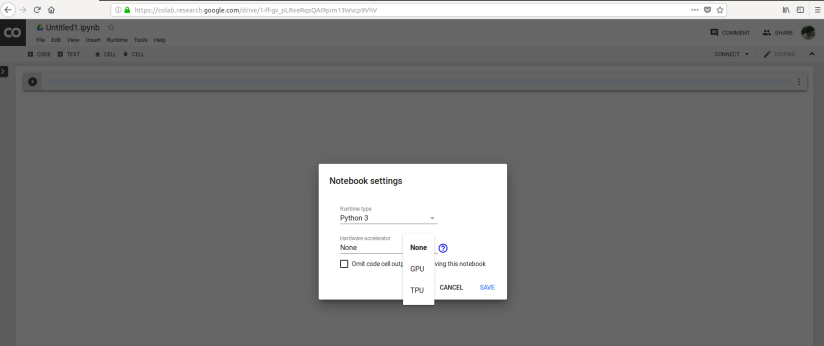

Select your runtime environment (None, GPU, or TPU) under Runtime > Change runtime type:

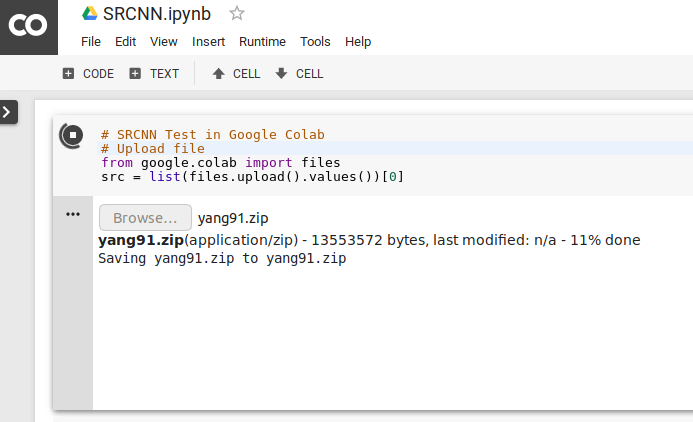
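To confirm from inside the notebook that a GPU runtime is active, a short check like the following can help. It is a sketch that assumes TensorFlow (preinstalled in Colab) and returns an empty list if TensorFlow is missing or no GPU is visible; the function name `available_gpus` is only an illustrative choice.

```python
def available_gpus():
    """Names of the GPUs TensorFlow can see; [] if none or if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return []
    # list_physical_devices("GPU") lists only GPUs (a TPU runtime will not show up here)
    return [gpu.name for gpu in tf.config.list_physical_devices("GPU")]

print(available_gpus())
```

On a GPU runtime the printed list should be non-empty; on a CPU-only runtime it is empty.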

Step 3: Upload the zip file and unzip it in Google Colab by running the code below in a notebook cell:

```python
# SRCNN Test in Google Colab
# Upload zip file
from google.colab import files
rc = list(files.upload().values())[0]
!unzip yang91.zip
```

Run the code above in Google Colab, choose your zip file in the upload dialog, and then browse the extracted directory as shown in the figure below:
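The extracted files can also be checked from Python instead of the file browser. A minimal sketch using the standard-library `zipfile` module, assuming the archive unpacks into a `yang91/` folder of `.bmp` files as the training code expects (`extract_and_list` is a hypothetical helper name):

```python
import glob
import os
import zipfile

def extract_and_list(zip_path, dest_dir=".", pattern="yang91/*.bmp"):
    """Extract zip_path into dest_dir and return the sorted paths matching pattern."""
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest_dir)  # creates dest_dir and sub-folders as needed
    return sorted(glob.glob(os.path.join(dest_dir, pattern)))
```

For example, `len(extract_and_list('yang91.zip'))` gives the number of `.bmp` images that were found after extraction.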

Step 4: Build and train the SRCNN model

```python
# Import libraries
import glob

import cv2  # reads the .bmp images (scipy.misc.imread no longer exists in recent SciPy)
import numpy as np
import scipy.ndimage
from keras import optimizers
from keras.layers import Activation, Conv2D, Input
from keras.models import Model
```

```python
# Build SRCNN model: 9x9 patch extraction -> 1x1 non-linear mapping -> 5x5 reconstruction
img_shape = (32, 32, 1)
input_img = Input(shape=img_shape)
C1 = Conv2D(64, (9, 9), padding='same', name='CONV1')(input_img)
A1 = Activation('relu', name='act1')(C1)
C2 = Conv2D(32, (1, 1), padding='same', name='CONV2')(A1)
A2 = Activation('relu', name='act2')(C2)
C3 = Conv2D(1, (5, 5), padding='same', name='CONV3')(A2)
A3 = Activation('relu', name='act3')(C3)
model = Model(input_img, A3)

# Newer Keras versions name this argument learning_rate (the old lr= is rejected)
opt = optimizers.Adam(learning_rate=0.0003)
model.compile(optimizer=opt, loss='mean_squared_error')
model.summary()
```
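As a sanity check on the `model.summary()` output: each `Conv2D` layer has (kernel height × kernel width × input channels + 1 bias) × filters trainable parameters, and the `Activation` layers have none. A small pure-Python sketch of that arithmetic for the three layers defined above (the helper name `conv2d_params` is illustrative):

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # Each filter has kernel_h * kernel_w * in_channels weights plus one bias
    return (kernel_h * kernel_w * in_channels + 1) * filters

# (name, kernel height, kernel width, input channels, filters) as in the model above
layers = [
    ("CONV1", 9, 9, 1, 64),
    ("CONV2", 1, 1, 64, 32),
    ("CONV3", 5, 5, 32, 1),
]
counts = {name: conv2d_params(kh, kw, cin, f) for name, kh, kw, cin, f in layers}
total = sum(counts.values())
print(counts, total)
```

The total, 8,129, should match the "Total params" line printed by `model.summary()`; the small size is one reason SRCNN trains quickly even on a free Colab runtime.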

```python
# Helper functions: crop an image so its size is divisible by `scale`, then build
# the interpolated low-resolution input that is passed to the SRCNN model
def modcrop(image, scale=2):
    if len(image.shape) == 3:
        h, w, _ = image.shape
        h = h - np.mod(h, scale)
        w = w - np.mod(w, scale)
        image = image[0:h, 0:w, :]
    else:
        h, w = image.shape
        h = h - np.mod(h, scale)
        w = w - np.mod(w, scale)
        image = image[0:h, 0:w]
    return image

def create_LR(image, scale):
    label_ = modcrop(image, scale)
    # Must be normalized to [0, 1]
    label_ = label_ / 255.
    # Shrink by 1/scale, then enlarge back to the cropped size (blurry LR input)
    input_ = scipy.ndimage.zoom(label_, (1. / scale), prefilter=False)
    input_ = scipy.ndimage.zoom(input_, (scale / 1.), prefilter=False)
    return input_
```
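Cropping with `modcrop` first is what makes the two `zoom` calls in `create_LR` return an image of exactly the cropped size, so input and label patches line up pixel for pixel. A small self-contained check on a random array (the 250 x 333 size and the helper name `zoom_roundtrip_shape` are arbitrary examples):

```python
import numpy as np
import scipy.ndimage

def zoom_roundtrip_shape(h, w, scale, crop=True):
    """Return (shape before, shape after) a shrink-then-enlarge zoom, as in create_LR."""
    if crop:
        # Same arithmetic as modcrop: drop the remainder rows and columns
        h, w = h - h % scale, w - w % scale
    img = np.random.default_rng(0).random((h, w))
    small = scipy.ndimage.zoom(img, 1.0 / scale, prefilter=False)
    back = scipy.ndimage.zoom(small, float(scale), prefilter=False)
    return img.shape, back.shape

print(zoom_roundtrip_shape(250, 333, 4))              # cropped: the two shapes match
print(zoom_roundtrip_shape(250, 333, 4, crop=False))  # uncropped: the two shapes differ
```

Without the crop, `zoom` rounds the intermediate size, so the enlarged image comes back a few pixels smaller than the original.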

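```python
# Data path in Google Colab (the folder created by unzipping yang91.zip)
path = 'yang91/'
# Sort the file list so the train/validation split is the same on every run
files_y = sorted(glob.glob(path + '*.bmp'))

# Split the data into two parts: the first 85 images for training, the rest for validation
trainfiles = files_y[:85]
valfiles = files_y[85:]
```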

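```python
# Initial parameter settings
img_size = 32  # patch size; must match img_shape of the model
stride = 16    # step between neighboring patches
scale = 4      # upscaling factor
X_train = []
Y_train = []
X_val = []
Y_val = []
```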
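With these settings, the patch-extraction loops slide an $s \times s$ window (with $s$ = `img_size`) over each image in steps of $t$ = `stride`, so an image of height $h$ and width $w$ (both at least $s$) contributes

$$
N_{\text{patches}} = \left(\left\lfloor \frac{h - s}{t} \right\rfloor + 1\right)\left(\left\lfloor \frac{w - s}{t} \right\rfloor + 1\right)
$$

patches. For example, a 256 x 256 image with $s = 32$ and $t = 16$ gives

$$
\left(\left\lfloor \frac{224}{16} \right\rfloor + 1\right)^2 = 15^2 = 225 .
$$

Because the stride is half the patch size, neighboring patches overlap by 16 pixels, which multiplies the number of training samples drawn from the 91 images.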

```python
# Extract patch images for training
for file_y in trainfiles:
    # Read the luminance (grayscale) channel as floats in [0, 255];
    # scipy.misc.imread(..., flatten=True) was removed in SciPy 1.2
    tmp_y = cv2.imread(file_y, cv2.IMREAD_GRAYSCALE).astype(np.float64)
    tmp_X = create_LR(tmp_y, scale)       # interpolated input, cropped and scaled to [0, 1]
    tmp_y = modcrop(tmp_y, scale) / 255.  # label with the same crop and [0, 1] scaling
    h, w = tmp_y.shape
    for x in range(0, h - img_size + 1, stride):
        for y in range(0, w - img_size + 1, stride):
            sub_input = tmp_X[x:x + img_size, y:y + img_size].reshape(img_size, img_size, 1)
            sub_label = tmp_y[x:x + img_size, y:y + img_size].reshape(img_size, img_size, 1)
            X_train.append(sub_input)
            Y_train.append(sub_label)

# Extract patch images for validation (same steps as for training)
for file_y in valfiles:
    tmp_y = cv2.imread(file_y, cv2.IMREAD_GRAYSCALE).astype(np.float64)
    tmp_X = create_LR(tmp_y, scale)
    tmp_y = modcrop(tmp_y, scale) / 255.
    h, w = tmp_y.shape
    for x in range(0, h - img_size + 1, stride):
        for y in range(0, w - img_size + 1, stride):
            sub_input = tmp_X[x:x + img_size, y:y + img_size].reshape(img_size, img_size, 1)  # [32 x 32 x 1]
            sub_label = tmp_y[x:x + img_size, y:y + img_size].reshape(img_size, img_size, 1)  # [32 x 32 x 1]
            X_val.append(sub_input)
            Y_val.append(sub_label)
```
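The training and validation loops differ only in the file list and the target lists, so the inner patch loops could be factored into one function. A minimal sketch of such a helper (the name `extract_patches` is hypothetical, not part of any library):

```python
import numpy as np

def extract_patches(lr_img, hr_img, size=32, stride=16):
    """Cut aligned (input, label) patch pairs from two 2-D arrays of the same shape."""
    if lr_img.shape != hr_img.shape:
        raise ValueError("input and label images must have the same shape")
    h, w = hr_img.shape
    inputs, labels = [], []
    # Same window positions as the loops: top-left corners every `stride` pixels
    for x in range(0, h - size + 1, stride):
        for y in range(0, w - size + 1, stride):
            inputs.append(lr_img[x:x + size, y:y + size].reshape(size, size, 1))
            labels.append(hr_img[x:x + size, y:y + size].reshape(size, size, 1))
    return inputs, labels
```

Inside each file loop, `xs, ys = extract_patches(tmp_X, tmp_y, img_size, stride)` followed by `X_train.extend(xs)` and `Y_train.extend(ys)` would replace the two nested `for` loops.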

```python
# Convert lists to arrays
X_train = np.array(X_train)
Y_train = np.array(Y_train)
X_val = np.array(X_val)
Y_val = np.array(Y_val)
```
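`np.array` keeps the patches as 64-bit floats, while Keras trains in 32-bit floats by default, so the arrays can be created as float32 directly to roughly halve their memory use. A minimal sketch (the helper name `stack_patches` is hypothetical):

```python
import numpy as np

def stack_patches(patches, dtype=np.float32):
    """Turn a list of (32, 32, 1) patches into one (N, 32, 32, 1) array of the given dtype."""
    return np.asarray(patches, dtype=dtype)
```

For example, `X_train = stack_patches(X_train)` could be used in place of `X_train = np.array(X_train)`.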

```python
# Start training the model
model.fit(X_train, Y_train, batch_size=32, epochs=1, validation_data=(X_val, Y_val))
```

```python
# Save the model
model.save('scale4.h5')
```

```python
# Download the model file from Google Colab to your computer
from google.colab import files
files.download('scale4.h5')
```
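`files.download` saves the model to your local computer. If you would rather keep it in Google Drive, one option is to mount Drive with `google.colab.drive.mount` and copy the file there. The sketch below assumes the default mount point `/content/drive`; the helper `copy_to_folder` and the `srcnn` folder name are hypothetical.

```python
import os
import shutil

def copy_to_folder(src_path, dst_dir):
    """Copy src_path into dst_dir (created if needed) and return the new file path."""
    os.makedirs(dst_dir, exist_ok=True)
    return shutil.copy(src_path, dst_dir)

# In Colab (requires authorizing Drive access when prompted):
# from google.colab import drive
# drive.mount('/content/drive')
# copy_to_folder('scale4.h5', '/content/drive/MyDrive/srcnn')
```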

Congratulations, you have successfully trained and saved your SRCNN model!